Understanding the Capabilities and Limitations of LLMs in Radiology

Large language models in radiology are promising yet face barriers to their wider adoption

Since its public debut in 2022, ChatGPT has ushered AI into virtually every aspect of modern life. You can use the generative chatbot to plan a European vacation, solve complex math equations, write your resume, or turn yourself into an AI-generated action figure.

Researchers and commercial developers are adapting large language models (LLMs), which are trained to understand and generate human-like content, for a range of uses in radiology. Promising applications include clinical decision support, patient and medical education, literature reviews and automated data extraction.

"There are so many exciting things happening in the radiology space, although they're not widely adaptable at the moment," said Theodore T. Kim, MD, a fourth-year medical student at George Washington University in Washington, D.C.

Dr. Kim, who will be at Beth Israel Deaconess Hospital in Boston for his radiology residency, authored a RadioGraphics article on optimizing and managing the pitfalls of LLMs. "LLMs show great promise, especially when extended by other traditional software tools," he said.

Dr. Kim’s primer on LLMs is essential reading for radiologists who need to prepare for AI’s expanding role in radiology research and clinical practice.

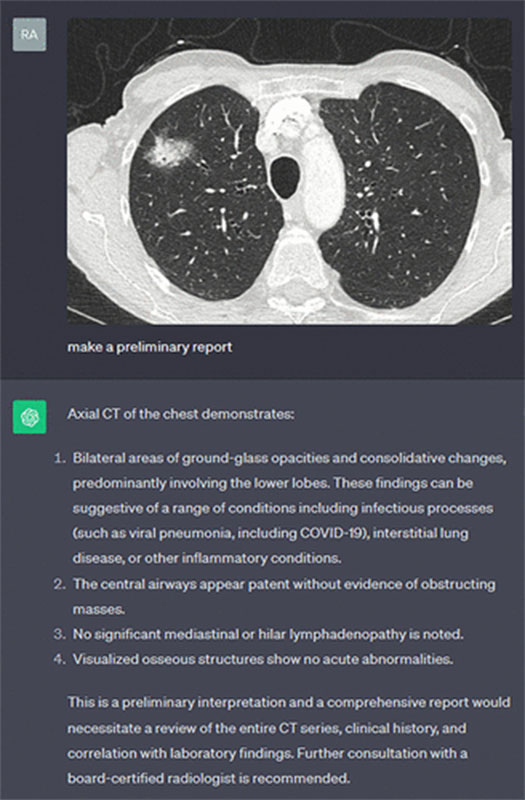

Real-life demonstration of GPT-4 Vision (https://openai.com/index/gpt-4/) used to generate a preliminary radiology report from an image of a CT scan of the chest. Of note, this example simply serves to highlight the potential of large multimodal models and not necessarily the correctness of the response.

https://pubs.rsna.org/doi/10.1148/rg.240073 ©RSNA 2025

Becoming AI Literate

"We need some basic level of AI literacy," said John Mongan, MD, PhD, associate chair of translational informatics, University of California, San Francisco, and chair of the RSNA Artificial Intelligence (AI) Committee. "We must have a framework to be able to ask questions of vendors and understand the answers."

LLMs like GPT-4, Claude and Gemini are trained on massive text datasets to engage in dialogue with users. They generate conversational responses by predicting the next word in a sentence based on previous context.
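To make next-word prediction concrete, here is a minimal sketch using a toy word-pair (bigram) counter. It is an illustration only: real LLMs use neural networks over tokens and far longer context, and the four-phrase corpus and all function names below are invented for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, for illustration only (not real report data).
CORPUS = [
    "no acute cardiopulmonary abnormality",
    "no acute intracranial abnormality",
    "no acute cardiopulmonary process",
    "no pleural effusion",
]

def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: n / total for word, n in counts[prev].items()}

def most_likely_next(counts, prev):
    """Pick the single most probable next word after `prev`."""
    probs = next_word_probabilities(counts, prev)
    return max(probs, key=probs.get)

counts = train_bigrams(CORPUS)
probs = next_word_probabilities(counts, "acute")
print(probs)                              # probability of each word seen after "acute"
print(most_likely_next(counts, "acute"))  # the most frequent continuation
```

Because the toy model only knows what it has seen most often, it favors the most common continuation whether or not that continuation is true for the case at hand.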

"If you've provided a picture of a patient and it knows that 99 out of 100 other patients are similar, it tends to fill in the blanks with what's plausible," Dr. Mongan said.

This probabilistic nature of LLMs is a big challenge for developers, especially when designing products for medical use, which must perform reliably across all types of patient data and clinical situations.

Because many of these tools may not ultimately be evaluated by the U.S. Food and Drug Administration (FDA), radiologists must know how to critically evaluate LLMs' strengths and weaknesses.

"There are some really impressive product demos out there," Dr. Mongan said. "But questions remain about how to safely incorporate LLMs into practice in a way that saves time and improves efficiency."

Barriers to Adoption

Despite their promise, LLMs face significant barriers before they can be widely adopted in radiology. Current limitations of LLM-type tools include:

- A tendency to 'hallucinate' or produce false, nonsensical or irrelevant information.

- A lack of transparency in how models were trained.

- Potential biases and inaccuracies within training datasets.

"Many companies are developing tools to summarize clinical notes, so it's likely to be one of the first places where we'll see clinicians interfacing with LLMs," Dr. Mongan said. "But a major barrier is the difficulty in guaranteeing they're not fabricating information, or missing something important."

If undetected, errors can potentially result in harmful and even catastrophic consequences for a patient's care.

"LLMs show promise, especially when extended by other traditional software tools, but substantial work remains to address consistency, accuracy, hallucinations, bias, security, and privacy issues," Dr. Mongan said. "I think these are difficult but solvable problems."

He noted that some LLM-based solutions are ready now, such as auto-generated report impressions based on the radiologist’s findings and ambient dictation systems that convert stream-of-consciousness speech into structured reports. In both cases, it is relatively easy for the radiologist to detect omissions or hallucinations.

“Other use cases like report/note summarization, where it’s not easy or efficient for the radiologist to detect errors, may need an additional year or two to achieve a good risk/benefit balance,” Dr. Mongan said.

Critical Evaluation & Implementation

Before implementing AI software, radiologists should ask vendors about their testing methods and whether they have published results in peer-reviewed journals.

"We should be pushing the vendors to do rigorous evaluations and to be transparent," Dr. Mongan said.

Successful integration also requires workflow consideration. AI tools aren’t just algorithms—they’re systems that must fit into existing radiology environments. "You can have substantially different risk-benefit profiles based on how it's integrated," Dr. Mongan said. "Part of the conversation should be how we maximize benefits and mitigate risks."

In large institutions, a radiology informaticist may lead the integration process. Smaller practices may follow an established model or template.

Playing with AI

One of the best ways to understand the capabilities—and limitations—of LLMs is to try them out.

Currently, only academic or enterprise users with approved contracts can use protected health information with LLMs like GPT-4 in a HIPAA-compliant way. But for exploration purposes, fake case studies or simulated clinical scenarios can be safely input into publicly available tools.

"If you don't have access to these HIPAA-compliant options or a proprietary system, you can sit down with ChatGPT or Claude and input publicly available medical images from open access journals," Dr. Kim said. "Upload a fake radiology report you’ve created on your own and ask the model to generate an impression or full report. You may be surprised at what it picks up."

Techniques like prompt engineering (crafting tailored inputs for an LLM to guide its output) and fine-tuning (further training an LLM to fit a particular domain or problem), both outlined in Dr. Kim’s article, can further improve LLM responses.
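As a rough illustration of prompt engineering, the sketch below assembles a structured prompt from a role statement, explicit instructions and a worked example before the new case. It is not taken from Dr. Kim's article: the function name `build_impression_prompt`, the instruction wording and the sample text are all hypothetical, and the findings are fictional. The code only builds the text and does not call any model.

```python
def build_impression_prompt(findings, examples=()):
    """Assemble a structured prompt asking a model for a report impression."""
    parts = [
        "You are assisting a radiologist.",
        "Write a concise impression based only on the findings provided.",
        "Do not add findings that are not stated.",
    ]
    # Optional worked examples show the model the expected format.
    for example_findings, example_impression in examples:
        parts.append(
            f"Findings: {example_findings}\nImpression: {example_impression}"
        )
    # The new case ends with an open "Impression:" for the model to complete.
    parts.append(f"Findings: {findings}\nImpression:")
    return "\n\n".join(parts)

# Entirely fictional text, written for this example.
example = (
    "Lungs are clear. No pleural effusion.",
    "No acute cardiopulmonary abnormality.",
)
prompt = build_impression_prompt(
    "Simulated case: 4 mm nodule in the right upper lobe.",
    [example],
)
print(prompt)
```

Pasting the printed text into a publicly available chatbot, and then varying the instructions or the worked example, is one simple way to see how much the wording of a prompt changes the response.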

“Generative LLMs are a cool tool you can play with,” Dr. Kim said. “You don’t need advanced coding knowledge—it’s very accessible.”

For More Information

Access the RadioGraphics article, “Optimizing Large Language Models in Radiology and Mitigating Pitfalls: Prompt Engineering and Fine-tuning,” and the related commentary, “Reflections on Prompt Engineering and Generative Artificial Intelligence in Radiology.”

Read previous RSNA News stories on large language models: