AI Helps Radiologists Spot More Lesions in Mammograms

Eye tracking data reveals how AI alters radiologists' visual search patterns

AI improves breast cancer detection accuracy for radiologists when reading screening mammograms, helping them devote more of their attention to suspicious areas, according to a study published in Radiology.

Previous research has shown that AI for decision support improves radiologist performance by increasing sensitivity for cancer detection without extending reading time. However, the impact of AI on radiologists’ visual search patterns remains underexplored.

To learn more, researchers used an eye tracking system to compare radiologist performance and visual search patterns when reading screening mammograms without and with an AI decision support system.

The system included a small camera-based device, equipped with two infrared lights and a central camera, positioned in front of the screen. The infrared lights illuminate the radiologist’s eyes, and the camera captures the reflections, allowing the system to compute the exact coordinates of the radiologist’s gaze on the screen.

“By analyzing this data, we can determine which parts of the mammograms the radiologists focus on, and for how long, providing valuable insights into their reading patterns,” said the study’s joint first author Jessie J. J. Gommers, MSc, from the Department of Medical Imaging, Radboud University Medical Center in Nijmegen in the Netherlands.
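
As a rough illustration of the kind of measurement Gommers describes, the sketch below sums how long a reader's gaze rests inside one region of an image. It is not the study's analysis pipeline: the `Fixation` and `Region` types, the rectangular region, and the sample values are all hypothetical and chosen only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    """One gaze fixation (hypothetical record layout)."""
    x: float            # horizontal gaze position on screen, in pixels
    y: float            # vertical gaze position on screen, in pixels
    duration_ms: float  # how long the gaze rested at this point


@dataclass
class Region:
    """Axis-aligned rectangle around an area of interest, in pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, f: Fixation) -> bool:
        return self.left <= f.x <= self.right and self.top <= f.y <= self.bottom


def dwell_time_ms(fixations, region):
    """Total duration of all fixations that fall inside the region."""
    return sum(f.duration_ms for f in fixations if region.contains(f))


def dwell_fraction(fixations, region):
    """Share of total fixation time spent inside the region (0.0 if no data)."""
    total = sum(f.duration_ms for f in fixations)
    return dwell_time_ms(fixations, region) / total if total else 0.0


# Made-up example: three fixations, two of which land inside the region.
fixations = [
    Fixation(120.0, 340.0, 250.0),
    Fixation(610.0, 415.0, 400.0),
    Fixation(630.0, 430.0, 350.0),
]
lesion = Region(left=600.0, top=400.0, right=700.0, bottom=500.0)

print(dwell_time_ms(fixations, lesion))
print(dwell_fraction(fixations, lesion))
```

Comparing such a dwell fraction for the same case read with and without AI support is one simple way to express whether attention shifted toward a marked region.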

AI Guides Radiologists to Suspicious Areas

In the study, 12 radiologists read mammography examinations from 150 women, including 75 with breast cancer and 75 without.

Breast cancer detection accuracy among the radiologists was higher with AI support compared with unaided reading. There was no evidence of a difference in mean sensitivity, specificity or reading time.

“The results are encouraging,” Gommers said. “With the availability of the AI information, the radiologists performed significantly better.”

Eye tracking data showed that radiologists spent more time examining regions that contained actual lesions when AI support was available.

“Radiologists seemed to adjust their reading behavior based on the AI’s level of suspicion: when the AI gave a low score, it likely reassured radiologists, helping them move more quickly through clearly normal cases,” Gommers said. “Conversely, high AI scores prompted radiologists to take a second, more careful look, particularly in more challenging or subtle cases.”

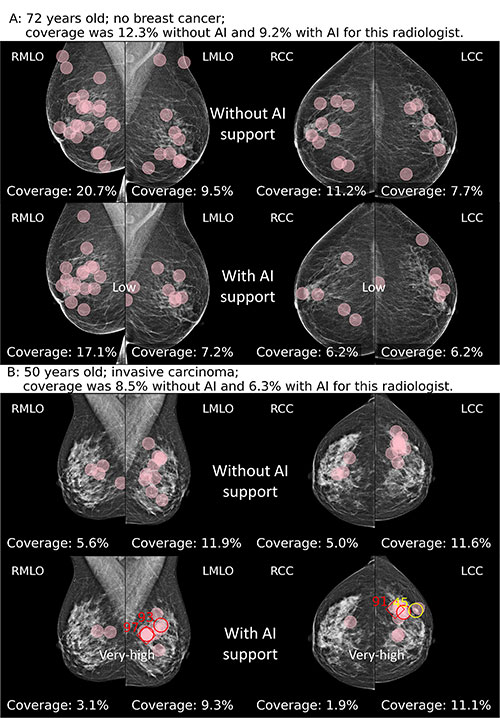

(A) Fixations (pink circles) of a radiologist while reading without and with AI support on a screening mammogram in a 72-year-old woman without breast cancer. The radiologist did not recall the woman in either reading condition. The AI tool classified this examination as low risk, with a maximum region score under 40. (B) Fixations of a radiologist while reading without and with AI support on a screening mammogram in a 50-year-old woman with invasive carcinoma. The radiologist recalled this woman in both reading conditions. The AI tool classified this examination as very high risk, with a maximum region score of 80 or higher (red numbers; yellow number represents intermediate risk). Diamonds indicate calcifications, and circles denote soft tissue lesions.

https://doi.org/10.1148/radiol.243688 ©RSNA 2025

The AI’s region markings functioned like visual cues, Gommers said, guiding radiologists’ attention to potentially suspicious areas. In essence, she said, the AI acted as an extra set of eyes, giving the radiologists additional information that enhanced both the accuracy and efficiency of interpretation.

“Overall, AI not only helped radiologists focus on the right cases but also directed their attention to the most relevant regions within those cases, suggesting a meaningful role for AI in improving both performance and efficiency in breast cancer screening,” Gommers said.

Gommers noted that overreliance on erroneous AI suggestions could lead to missed cancers or unnecessary recalls for additional imaging. However, multiple studies have found that AI can perform as well as radiologists in mammography interpretation, suggesting that the risk of erroneous AI information is relatively low.

To mitigate the risks of errors, Gommers said, it is important that the AI is highly accurate and that the radiologists using it feel accountable for their own decisions.

“Educating radiologists on how to critically interpret the AI information is key,” she said.

The researchers are currently conducting additional reader studies to explore when AI information should be made available, for example immediately upon opening a case or only on request. They are also developing methods to predict whether the AI is uncertain about its decisions.

“This would enable more selective use of AI support, applying it only when it is likely to provide meaningful benefit,” Gommers said.

For More Information

Access the Radiology article, “Influence of AI Decision Support on Radiologists’ Performance and Visual Search in Screening Mammography,” and the related editorial, “Behavioral Research Will Help Us Understand When AI Will Help Radiologists.”
