Advanced AI Expands Possibilities of Chest X-Ray Classification

Deep learning model performs comparably to state-of-the-art models

Chest X-rays (CXRs) are among the most common imaging studies, with more than 830 million performed annually worldwide. Significant effort has gone into developing AI-based deep learning models to detect CXR abnormalities. However, developing AI for CXRs is challenging because it requires extremely large hand-labeled training datasets and models that generalize to different populations and institutions.

“Generating these big databases takes a lot of time,” said study co-author Mozziyar Etemadi, MD, PhD, research assistant professor in Anesthesiology and Biomedical Engineering at Northwestern Medicine in Chicago. “You must extract data from a clinical system that might include hundreds of thousands of patient records, and you must meticulously remove all patient identifiers for privacy reasons. This is all before you even start to think about labeling the data by hand.”

Dr. Etemadi collaborated with researchers at Google to create a much faster way to develop a CXR classification model, one that requires significantly less data and fewer labels.

The new method converts CXR images into information-rich numerical vectors, known as embeddings, which can then be used to train models more easily for specific medical prediction tasks, such as detecting a clinical condition or predicting a patient outcome.

“The computer sees CXRs as a series of numbers,” said Andrew Sellergren, a software engineer at Google Health, who works on a team that is devoted to using machine learning to help solve health care challenges. “Our model takes those millions of numbers and compresses them to a much smaller array of numbers on the order of a few thousand, which makes model development significantly easier. Yes, there is some loss of information with this technique, but the hope is to retain and even amplify the information most relevant to classification and diagnosis, similar to how a radiology report aims to summarize an image.”
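The embed-then-classify idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's implementation: a fixed random projection stands in for the trained network that produces the embeddings, the 64 × 64 "images" and 64-number embeddings are hypothetical toy sizes (the article describes compressing millions of pixel values to a few thousand numbers), and the "abnormal" class is simulated by a bright square. The helper names `embed`, `make_images` and `train_linear_probe` are hypothetical. What it shows is that once each image is a short vector, a simple logistic-regression classifier trained on only 45 labeled examples can handle the downstream task.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy sizes: real CXRs have millions of pixel values and the
# article describes embeddings of a few thousand numbers.
IMAGE_SIDE = 64
EMBED_DIM = 64

# Stand-in encoder (assumption): a fixed random projection. The study used a
# trained deep network; this only mimics "many numbers in, few numbers out".
PROJECTION = rng.normal(size=(IMAGE_SIDE * IMAGE_SIDE, EMBED_DIM)) / IMAGE_SIDE


def embed(images: np.ndarray) -> np.ndarray:
    """Flatten each image and compress it to an EMBED_DIM-length vector."""
    return images.reshape(len(images), -1) @ PROJECTION


def make_images(n: int):
    """Hypothetical toy data: noise images, with a bright square marking 'abnormal' ones."""
    labels = rng.integers(0, 2, size=n)
    images = rng.normal(scale=0.5, size=(n, IMAGE_SIDE, IMAGE_SIDE))
    images[labels == 1, 20:36, 20:36] += 2.0
    return images, labels


def sigmoid(logits: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-logits))


def train_linear_probe(x: np.ndarray, y: np.ndarray, lr: float = 0.1, epochs: int = 500):
    """Logistic regression fit by full-batch gradient descent on frozen embeddings."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        grad = sigmoid(x @ w + b) - y  # gradient of the log loss w.r.t. the logits
        w -= lr * x.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


# Only 45 labeled toy images are used to train the classifier.
train_images, train_y = make_images(45)
test_images, test_y = make_images(200)
w, b = train_linear_probe(embed(train_images), train_y)
acc = float(((sigmoid(embed(test_images) @ w + b) > 0.5) == test_y).mean())
print(f"held-out accuracy: {acc:.2f}")
```

In this sketch the encoder stays fixed and only the small classifier on top is trained, which is why so few labeled examples suffice for the toy task.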

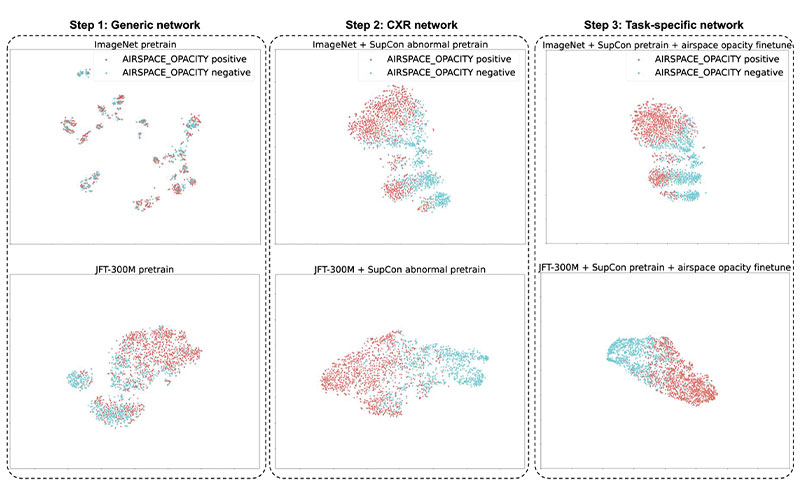

t-Distributed stochastic neighbor embedding (t-SNE) visualizations of the embeddings at each step of the three-step training setup. The supervised contrastive (SupCon) embeddings produced a better visual separation of the classes (middle) than the generic pretrained network (left) and as good a separation as a fully fine-tuned network (right). Note that this visualization technique uses highly nonlinear axes, so neither axis can be assigned readily interpretable units. CXR = chest radiography, ImageNet = data set containing natural images, JFT-300M = larger data set containing natural images.

https://doi.org/10.1148/radiol.212482 ©RSNA 2022

AI Helped Reduce Number of Dataset Images Needed

To facilitate the model’s development, the researchers used supervised contrastive (SupCon) learning, a training method that builds on self-supervised contrastive learning techniques but uses the image labels to decide which images should be treated as similar.

“With SupCon, we are bringing together images that are similar to each other and pushing apart images that are different,” Sellergren said. “In this context, you can do a deep dive into normal images that are grouped together; within the abnormal space, you can dive even deeper in this compressed space.”
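The "pull together, push apart" idea can be written as a loss function. Below is a minimal NumPy sketch of the supervised contrastive loss as formulated by Khosla et al. (2020); it is not the study's training code, and the temperature of 0.1, the function name `supcon_loss` and the toy 2-D data are illustrative assumptions. For each anchor image, the other images with the same label are positives, while every other image appears in the softmax denominator, so the loss falls as same-class embeddings cluster together and different classes move apart.

```python
import numpy as np


def supcon_loss(features: np.ndarray, labels: np.ndarray, temperature: float = 0.1) -> float:
    """Supervised contrastive loss (Khosla et al., 2020), averaged over anchors.

    features: (n, d) embeddings; labels: (n,) integer class ids.
    temperature=0.1 is an illustrative assumption, not a value from the study.
    """
    z = features / np.linalg.norm(features, axis=1, keepdims=True)  # unit-length embeddings
    n = len(labels)
    not_self = ~np.eye(n, dtype=bool)
    # Cosine similarity scaled by temperature; an anchor never competes with itself.
    logits = np.where(not_self, z @ z.T / temperature, -np.inf)
    # Numerically stable log-softmax over each anchor's row ("push apart" via the denominator).
    row_max = logits.max(axis=1, keepdims=True)
    shifted = logits - row_max
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Positives: other samples that share the anchor's label ("pull together").
    positives = (labels[:, None] == labels[None, :]) & not_self
    n_pos = positives.sum(axis=1)
    has_pos = n_pos > 0  # anchors with no positive partner are skipped
    pos_log_prob = np.where(positives, log_prob, 0.0).sum(axis=1)
    return float(-(pos_log_prob[has_pos] / n_pos[has_pos]).mean())


# Hypothetical toy data: two labeled groups of 8 points each.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 8)
centers = np.array([[5.0, 0.0], [0.0, 5.0]])
clustered = centers[labels] + rng.normal(scale=0.3, size=(16, 2))  # classes well separated
scrambled = rng.normal(size=(16, 2))  # positions unrelated to labels
print(supcon_loss(clustered, labels), supcon_loss(scrambled, labels))
```

The clustered embeddings give a much lower loss than the scrambled ones, which is the signal that drives training toward the class separation seen in the middle panel of the figure.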

Sellergren, Dr. Etemadi, and colleagues evaluated the approach on 10 CXR prediction tasks, including fracture, tuberculosis and COVID-19 outcomes. Compared with transfer learning from a non-medical dataset, SupCon reduced label requirements for most tasks by up to 688-fold and improved the area under the receiver operating characteristic curve (AUC) at matching dataset sizes. At the extreme low-data end of the spectrum, a tuberculosis classifier trained on only 45 CXRs achieved an AUC of 0.95, performance similar to that of radiologists.
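AUC can be read as the probability that a model scores a randomly chosen positive case (for example, a tuberculosis CXR) higher than a randomly chosen negative one; 0.5 is chance and 1.0 is perfect separation. The sketch below computes it directly from that definition using pairwise comparisons, with ties counted as half. The function name `roc_auc` and the four example scores are hypothetical and unrelated to the study's data.

```python
import numpy as np


def roc_auc(labels, scores) -> float:
    """AUC as the probability that a random positive outscores a random negative (ties count 1/2)."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos = scores[labels]
    neg = scores[~labels]
    # Compare every positive with every negative: O(n_pos * n_neg), fine for small sets.
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((wins + 0.5 * ties) / (len(pos) * len(neg)))


# Two negatives (0.1, 0.4) and two positives (0.35, 0.8): 3 of 4 pairs ranked correctly.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # → 0.75
```

By this reading, an AUC of 0.95 means the classifier ranks a tuberculosis case above a non-tuberculosis case in about 95% of such pairs.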

“Usually, models require hundreds of thousands or even millions of hand-labeled chest X-rays,” Dr. Etemadi said. “We’ve got a model that worked really well with only 45 X-rays, meaning that with this new approach you can reduce the CXR requirement by many orders of magnitude.”

Dr. Etemadi emphasized that the results are not directly applicable in the clinic. Instead, they represent a milestone in engineering efficiency, making it possible to rapidly train chest X-ray models on small datasets or when data are scarce.

“It’s all about trying to make the process of generating AI algorithms faster so that we can do it more often and can more expeditiously and efficiently incorporate the technology into real-time clinical care,” Dr. Etemadi said.

Benefits of Collaboration Between Medicine and Engineering

The researchers want to extend their studies beyond CXRs to other imaging modalities. They also plan to raise awareness of the need for education about the technology, because its engineering best practices differ from those of traditional AI work.

Both Dr. Etemadi and Sellergren attribute the success of the research to the collaboration between engineers, radiologists and other physicians.

“The best way forward is to open up for listening on both sides,” Sellergren said. “We’ve had an opportunity to put something out there and start a conversation, and hopefully we’ll hear good things about it, along with some ways to improve it.”

For More Information

Access the Radiology study, "Simplified Transfer Learning for Chest Radiography Models Using Less Data."
